After director Vikramaditya Motwane and VFX studio philmCGI’s previous collaboration on the Prime Video series Jubilee, the duo came together again for the Netflix sci-fi thriller Ctrl.

Ctrl tells the story of Nella (Ananya Panday) and Joe (Vihaan Samat), the perfect influencer couple. But when he cheats on her, she turns to an AI app to erase him from her life, until it takes control.

Shot in the screenlife format, Motwane’s directorial showcases the dark side of artificial intelligence (AI), with the story presented onscreen through Panday’s virtual messages and interactions. The unique aesthetics contributed to the plot and highlighted the world of online content creators and influencers. Pune-based philmCGI delivered a total of 945 VFX shots, covering 83 minutes of the 99-minute film’s screen time. While Ctrl wrapped its shoot in just 16 days, its post-production journey spanned 16 months. A core team of 25 artists worked tirelessly, supported by a total of 99 artists who contributed at various stages of the project.

To understand how philmCGI’s work brought Motwane’s ambitious vision to life in the cyber thriller, AnimationXpress spoke to the studio’s co-founder and managing director Anand Bhanushali; managing director and visual effects supervisor for Ctrl, Arpan Gaglani; and creative director Kanchi Kanani, who shared their team’s cumulative experience.

Ctrl is reminiscent of a Black Mirror-style tech dystopia. What were the director’s requirements in terms of VFX?

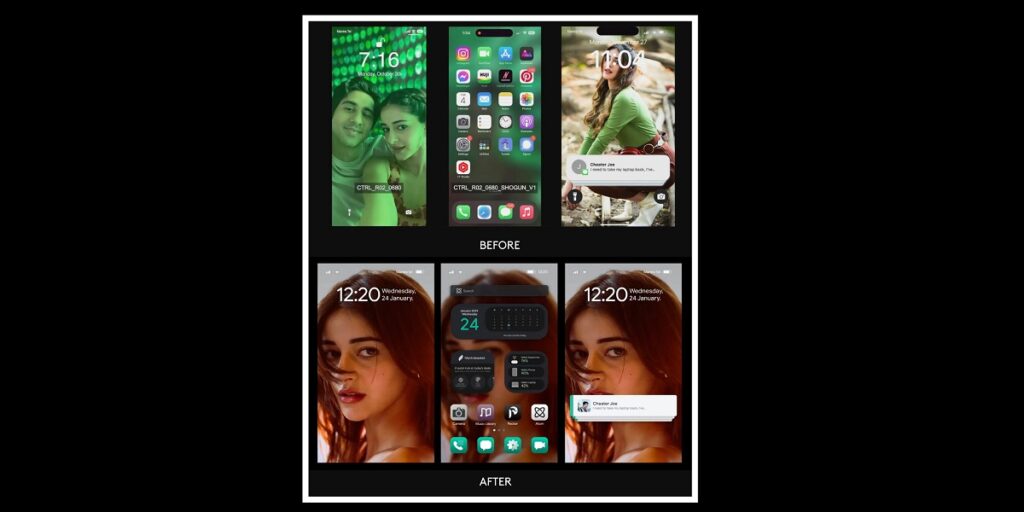

Kanani: Vikramaditya Motwane, a tech enthusiast, envisioned a visual language that was sleek, minimal, and perfectly aligned with UX/UI trends. Since we couldn’t use the actual popular apps as they are, it was essential to recreate them in a way that maintained strong recall value for the audience. The designs had to feel instantly familiar while still being unique enough to fit into the film’s universe. The goal was to ensure that the film’s visuals not only complemented the narrative but also reflected the sophistication of modern design aesthetics.

The story revolves around data privacy in the age of AI. Could you elaborate on creating the virtual avatar Allen?

Gaglani: The AI character Allen was already created during the shoot itself, laying the foundation for its integration into the film from the very beginning. But by the time the film transitioned from production to post, AI technology had advanced significantly, making it crucial for the visual effects to remain contemporary and on par with the latest innovations. So once the AI trend caught on, we realised that the earlier version of Allen no longer felt cutting-edge. To align with the evolving standards, we revisited his design and focused on enhancing every detail—skin, hair, textures, and even the glisten in his eyes.

The director had a clear vision for Allen; he wanted the character to look distinctly AI but not entirely photorealistic. The goal was to capture the essence of today’s AI, complete with subtle imperfections and minor glitches, rather than portraying a futuristic, flawless version. This approach added authenticity and aligned perfectly with the film’s narrative.

Have you incorporated AI while creating the visuals for this film?

Bhanushali: Certain AI tools played a key role in the VFX RPP (Roto Prep and Paint) work for the film. We primarily leveraged machine learning to streamline specific processes. Given that the film’s narrative revolved around AI, it was essential for us to deeply understand and explore various AI applications. From research and referencing to harnessing machine learning for optimisation, AI became an integral part of our workflow, ensuring the film stayed true to its theme while pushing the boundaries of technology in visual storytelling.

You used motion capture without traditional equipment, relied only on an iPad, and implemented live linking with on-screen integration. What were the unique challenges and advantages of this approach?

Gaglani: The idea was to have a dynamic, on-the-go setup that would give results close to those of a traditional setup. A traditional setup, with the HMC (helmet-mounted camera), suits and so on, would be tedious to handle, and getting it done within the timeline and with the space restrictions that come with many studios these days was a challenge. Hence we turned to using Live Link on iPhones and iPads, a process that uses the devices’ depth sensors and Lidar (light detection and ranging) technology to achieve amazing results.

We set up two iPads: one to capture the actors’ facial movements, which were later processed via MetaHuman Animator to get a refined result, and another to run a Live Link animation so the director could see the performance on screen and give his inputs to the actor. This gave us not only the creative freedom the director wanted but also the technical data that we would need to process and get good results.

This process, however, had its own technical challenges. Firstly, not all the data could be captured, so the challenge was filling the gaps by replacing the lost data with custom animated keys. Secondly, we had to build a rig to hold and mount two iPads/iPhones, while also being able to transfer the live data onto our servers, since the data can be huge at times. Thirdly, in order to get the recording session perfect, we did the live linking while the dubbing was being done; syncing the two and achieving both tasks at once was great, but challenging.

The main advantage is that we now have a dynamic setup that gives us production-quality, quick-setup mocap on the go. It was also a great learning experience, since the team understood how we can improvise and enhance the process further.

Which was the most challenging sequence in this film?

Gaglani: The deepfake sequence was hands-down the most challenging. Using actual AI software would have saved time and delivered precise output, but due to legal restrictions on employing AI in commercial films, we had to create the entire sequence from scratch. Stitching the actor’s real monologue with the fake one was particularly tricky: it needed to look convincingly artificial while still fitting into the scene properly. In some places, we manually adjusted the actor’s lip movements to give it that unmistakable deepfake aesthetic. It was a meticulous process, but the result is a sequence we are incredibly proud of.

What are the next projects in the pipeline?

Bhanushali: We have an exciting slate of projects lined up – from a full-length animated feature film to a gripping series on an OTT platform, and even some ambitious live-action feature films.

The overall work done by philmCGI for Ctrl demonstrates the need for VFX in delivering these kinds of stories. Creating a character that looks distinctly AI but not entirely photorealistic is all about exploring technical innovations without compromising on the creative aspect.